This comprehensive guide is designed to help your organization successfully implement and adopt LaunchDarkly feature flags as part of your Center of Excellence initiative. Whether you're a program manager setting objectives or a developer implementing SDKs, this guide provides structured, step-by-step guidance to ensure successful LaunchDarkly adoption across your development teams.

What You'll Find Here

Program Management

Establish clear objectives, key results, and measurable success criteria for your LaunchDarkly implementation. This section helps program managers and leadership:

- Define OKRs aligned with business objectives

- Set up governance and best practices

- Track adoption metrics and success indicators

SDK Implementation

Technical guidance for developers implementing LaunchDarkly SDKs in their applications. This section includes:

- Preflight Checklists - Step-by-step implementation guides

- Configuration best practices

- Security and compliance considerations

- Testing and validation procedures

Getting Started

New to LaunchDarkly? Start with the Program Management section to understand the strategic approach and objectives.

Ready to implement? Jump to the SDK Preflight Checklist for hands-on technical guidance.

Looking for specific guidance? Use the search functionality to quickly find relevant information.

How to Use This Guide

This guide is structured as a progressive journey:

- Plan - Establish program objectives and success criteria

- Prepare - Complete preflight checklists for your technology stack

- Implement - Follow step-by-step SDK integration guides

- Validate - Test and verify your implementation

- Scale - Apply best practices across your organization

Each section builds upon the previous one, but you can also jump to specific topics based on your immediate needs.

Need Help?

- LaunchDarkly Documentation: launchdarkly.com/docs

- LaunchDarkly Developer Hub: developers.launchdarkly.com

- LaunchDarkly Help Center: support.launchdarkly.com

- LaunchDarkly Academy: launchdarkly.com/academy


Overview

This topic explains the objectives and key results for the LaunchDarkly Program. We recommend using the following guidelines when creating objectives and key results:

Objectives

Write objectives as clear, inspirational statements that describe what you want to achieve. Each objective should be:

- Measurable through specific key results

- Aligned with broader program goals

- Reviewed and updated regularly to reflect changing priorities

Limit each objective to three to five key results.

Key results

Key results measure progress toward your objective. They must be specific, measurable, and verifiable.

Use quantitative metrics:

- State exact numbers, percentages, or timeframes

- Define what you measure and how you measure it

- Include baseline values when comparing improvements

- Specify the time period for measurement

Focus on outcomes:

- Measure results, not activities

- Track what changes, not what you do

- Use leading indicators that predict success

- Include lagging indicators that confirm achievement

Ensure clarity:

- Use clear language that anyone can understand

- Avoid vague terms like "improve" or "better"

- Include specific targets or thresholds

- Define success criteria explicitly

Set realistic targets:

- Aim for 70% to 80% achievement probability

- Set stretch goals that require effort to reach

- Review historical performance to inform targets

Include time-bound elements:

- Set clear deadlines or timeframes

- Define measurement periods

- Align timing with objective review cycles

Program OKRs

Objective: Application teams onboard quickly and correctly with minimal support

Key Results

- Time to provision and access LaunchDarkly with correct permissions is less than one day per team

- Less than three hours of ad hoc support requested per team during onboarding

- Less than five support tickets created per team during onboarding

- All critical onboarding tasks complete and first application live in a customer-facing environment within two sprints

Tasks

To achieve this objective, complete the following tasks:

- Create self-service documentation with step-by-step guides and video tutorials when necessary

- Configure single sign-on (SSO) and identity provider (IdP) integration

- Define teams and member mapping

- Assign all flag lifecycle related actions to at least one member in each project

- Define how SDK credentials are stored and made available to the application

- Create application onboarding checklist and a method to track completion across teams

Objective: Team members find flags related to their current task unambiguously

Key Results

- More than 95% of new flags created per quarter comply with naming convention

- More than 95% of active users access dashboard filters and shortcuts at least once per month

- More than 95% of new flags created per quarter include descriptions with at least 20 characters and at least one tag

- More than 95% of release flags created per quarter link to a Jira ticket

- Zero incidents of incorrect flag changes due to ambiguity per quarter

Tasks

To achieve this objective, complete the following tasks:

- Create naming convention document

- Document flag use cases and when and when not to use flags

- Create a method to track compliance with naming convention

- Enforce approvals in critical environments
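
One possible way to track compliance for the key results above is a small script run over an export of flag metadata. The sketch below is illustrative only: the `FlagRecord` shape and the kebab-case pattern with a type prefix are assumptions, not a LaunchDarkly API or a recommended convention, so substitute your own export format and naming rules.

```typescript
// Hypothetical flag record; field names are illustrative, not a LaunchDarkly API shape.
interface FlagRecord {
  key: string;
  description: string;
  tags: string[];
}

// Assumed convention: lowercase kebab-case with a type prefix, e.g. "release-new-checkout".
const NAMING_PATTERN = /^(release|ops|experiment|config)-[a-z0-9]+(-[a-z0-9]+)*$/;

// A flag is compliant when it matches the naming pattern, has a description
// of at least 20 characters, and carries at least one tag.
function isCompliant(flag: FlagRecord): boolean {
  return (
    NAMING_PATTERN.test(flag.key) &&
    flag.description.trim().length >= 20 &&
    flag.tags.length >= 1
  );
}

// Percentage of compliant flags, rounded to one decimal place.
// An empty list is reported as 100 because nothing is out of compliance.
function complianceRate(flags: FlagRecord[]): number {
  if (flags.length === 0) return 100;
  const passing = flags.filter(isCompliant).length;
  return Math.round((passing / flags.length) * 1000) / 10;
}
```

Comparing the result against the 95% target each quarter gives a repeatable measurement rather than a manual spot check.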

Objective: Team members de-risk releases consistently

Key Results

- Starting Q1 2026, 90% of features requiring subject matter expert (SME) testing released per quarter are behind feature flags

- More than 75% of P1/P2 incidents related to new features per quarter are remediated without new deploys

- Mean time to repair (MTTR) reduced by 50% compared to baseline for issues related to new features by end of Q2 2026

Tasks

To achieve this objective, complete the following tasks:

- Define and document release strategies:

  - Who does what when

  - How to implement in the platform using targeting rules and release pipelines

  - How to implement in code

- Define and document incident response strategies

- Integrate with software development lifecycle (SDLC) tooling:

  - Enable project management

  - Enable communication

  - Enable observability and application performance monitoring (APM)

  - Enable governance and change control

Objective: LaunchDarkly usage is sustainable with minimal flag-related technical debt

Key Results

- More than 95% of active flags have a documented owner at any point in time

- More than 95% of active flags have an up-to-date description and tags and comply with naming conventions at any point in time

- Median time to archive feature flags after release is less than 12 weeks

- 100% of flags older than six months are reviewed quarterly

- Flag cleanup service level agreements (SLAs) are established and followed for 100% of projects

Tasks

To achieve this objective, complete the following tasks:

- Implement actionable dashboards to visualize flag status including new, active, stale, and launched

- Define flag archival and cleanup policies

- Implement code references integration
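
The "median time to archive" key result can be computed from release and archive dates. The following is a minimal sketch that assumes you can export those dates per flag; the `FlagLifecycle` shape and its field names are hypothetical.

```typescript
// Hypothetical record of one flag's lifecycle dates (ISO 8601 strings).
interface FlagLifecycle {
  key: string;
  releasedAt: string;
  archivedAt: string;
}

const MS_PER_WEEK = 7 * 24 * 60 * 60 * 1000;

// Median number of weeks between release and archival.
function medianWeeksToArchive(flags: FlagLifecycle[]): number {
  if (flags.length === 0) throw new Error("no archived flags to measure");
  const weeks = flags
    .map((f) => (Date.parse(f.archivedAt) - Date.parse(f.releasedAt)) / MS_PER_WEEK)
    .sort((a, b) => a - b);
  const mid = Math.floor(weeks.length / 2);
  // Odd count: middle element. Even count: mean of the two middle elements.
  return weeks.length % 2 === 1 ? weeks[mid] : (weeks[mid - 1] + weeks[mid]) / 2;
}
```

A median is used here because a few long-lived flags would otherwise dominate an average.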

SDK Preflight Checklist

Audience: Developers who are implementing the LaunchDarkly SDKs

Init and Config

- SDK is initialized once as a singleton early in the application's lifecycle

- Application does not block indefinitely for initialization

- SDK configuration integrated with existing configuration/secrets management

Client-side SDKs

Browser SDKs

Mobile SDKs

Serverless functions

Using Flags

- Define context kinds and attributes

- Define and document fallback strategy

- Use variation/variationDetail, not allFlags/allFlagsState for evaluation

- Flags are evaluated only where a change of behavior is exposed

- The behavior changes are encapsulated and well-scoped

- Subscribe to flag changes

Init and Config

Baseline Recommendations

This table shows baseline recommendations for SDK initialization and configuration:

| Area | Recommendation | Notes |
|---|---|---|
| Client-side init timeout | 100–500 ms | Don't block UI. Render with fallbacks or bootstrap. |
| Server-side init timeout | 1–5 s | Keep short for startup. Continue with fallback values after timeout. |
| Private attributes | Configured | Redact PII. Consider allAttributesPrivate where appropriate. |
| JavaScript SDK bootstrapping | localStorage | Reuse cached values between sessions. |

SDK is initialized once as a singleton early in the application's lifecycle

Applies to: All SDKs

Prevent duplicate connections, conserve resources, and ensure consistent caching/telemetry.

Implementation

- MUST Expose exactly one ldClient per process/tab via a shared module/DI container (root provider in React).

- SHOULD Make init idempotent: reuse the existing client if already created.

- SHOULD Close the client cleanly on shutdown. In serverless, create the client outside the handler for container reuse.

- NICE-TO-HAVE Emit a single startup log summarizing effective LD config (redacted).

Validation

- Pass if metrics/inspector show one stream connection per process/tab.

- Pass if event volume and resource usage do not scale with repeated imports/renders.
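
The idempotent, single-instance requirement can be sketched as a small provider that wraps whatever factory creates your SDK client. `FlagClient` and `createClientProvider` are hypothetical names used for illustration; a real SDK client exposes a much richer API.

```typescript
// Minimal stand-in for an SDK client; real SDK clients expose a richer API.
interface FlagClient {
  close(): void;
}

// Wraps a factory so that the client is created at most once per process or tab.
function createClientProvider<T extends FlagClient>(factory: () => T) {
  let instance: T | undefined;
  return {
    // Idempotent: repeated calls reuse the existing client.
    get(): T {
      if (instance === undefined) instance = factory();
      return instance;
    },
    // Closes the client on shutdown and clears the cached instance.
    shutdown(): void {
      instance?.close();
      instance = undefined;
    },
  };
}
```

Exporting one provider from a shared module means repeated imports or re-renders call `get()` instead of constructing a new client.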

Application does not block indefinitely for initialization

Applies to: All SDKs

A LaunchDarkly SDK is initialized when it connects to the service and is ready to evaluate flags. If variation is called before initialization, the SDK returns the fallback value you provide. Do not block your app indefinitely while waiting for initialization. The SDK continues connecting in the background. Calls to variation always return the most recent flag value.

Implementation

- MUST Set an initialization timeout

  - Client-side: 100–500 ms.

  - Server-side: 1–5 s.

- SHOULD Race initialization against a timer if using an SDK that lacks a native timeout parameter.

- MAY Render/serve using bootstrapped or fallback values, then update when flags are ready.

- MAY Subscribe to change events to proactively respond to flag updates.

- MAY Configure a persistent data store to avoid fallback values in the event that the SDK is unable to connect to LaunchDarkly services.

Validation

- Pass if, with endpoints blocked, the app renders using fallbacks or bootstrapped values within the configured timeout.

- Pass if restoring connectivity updates values without a restart.

How to emulate:

- Point streaming/base/polling URIs to an invalid host

- Block the SDK domains in the container or host running the tests: stream.launchdarkly.com, sdk.launchdarkly.com, clientsdk.launchdarkly.com, app.launchdarkly.com

- In browsers, block clientstream.launchdarkly.com, clientsdk.launchdarkly.com, and/or app.launchdarkly.com in DevTools.
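
For SDKs without a native timeout parameter, racing initialization against a timer might look like the sketch below. It assumes only that the SDK exposes some promise that settles when initialization finishes (for example, the promise returned by `waitForInitialization()` in the JavaScript SDKs); `raceInitialization` and `InitOutcome` are hypothetical helper names.

```typescript
type InitOutcome = "ready" | "timeout" | "failed";

// Resolves with whichever happens first: the SDK becomes ready,
// initialization fails, or the timer expires. It never rejects,
// so callers can always proceed with fallback values.
function raceInitialization(ready: Promise<unknown>, timeoutMs: number): Promise<InitOutcome> {
  return new Promise<InitOutcome>((resolve) => {
    const timer = setTimeout(() => resolve("timeout"), timeoutMs);
    ready.then(
      () => {
        clearTimeout(timer);
        resolve("ready");
      },
      () => {
        clearTimeout(timer);
        resolve("failed");
      },
    );
  });
}
```

On "timeout" or "failed" the application continues with fallback or bootstrapped values; the SDK keeps connecting in the background, so later evaluations pick up live values without a restart.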

SDK configuration integrated with existing configuration/secrets management

Applies to: All SDKs

Use your existing configuration pipeline so LD settings are centrally managed and consistent across environments. Avoid requiring code changes to set common SDK options.

Implementation

- MUST Load SDK credentials from existing configuration/secrets management system.

- MUST NOT Expose the server-side SDK Key to client applications

- SHOULD Use your configuration management system to set common SDK configuration options such as:

  - HTTP proxy settings

  - Log verbosity

  - Enabling/disabling events in integration testing or load testing environments

  - Private attributes

Validation

- Pass if rotating the SDK key in the vault results in successful rollout and the old key is revoked.

- Pass if a repository scan finds no SDK keys or environment IDs committed.

- Pass if startup logs (redacted) show expected config per environment and egress connectivity succeeds with 200/OK or open stream.
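
As a rough sketch of the items above, SDK settings can be read from whatever key/value configuration your platform already injects, validated once, and summarized in a redacted startup log line. The variable names (`LD_SDK_KEY`, `LD_LOG_LEVEL`, `LD_SEND_EVENTS`, `LD_PROXY_URL`) and the `LdSettings` shape are assumptions for illustration, not actual SDK option names.

```typescript
// Hypothetical settings shape; option names are illustrative rather than actual SDK options.
interface LdSettings {
  sdkKey: string;
  logLevel: "debug" | "info" | "warn" | "error";
  sendEvents: boolean;
  proxyUrl: string | undefined;
}

type ConfigSource = Record<string, string | undefined>;

// Builds settings from injected configuration values (variable names are assumptions).
function loadLdSettings(source: ConfigSource): LdSettings {
  const sdkKey = source["LD_SDK_KEY"];
  if (!sdkKey) throw new Error("LD_SDK_KEY is not set");
  const level = source["LD_LOG_LEVEL"] ?? "info";
  if (level !== "debug" && level !== "info" && level !== "warn" && level !== "error") {
    throw new Error(`Unsupported LD_LOG_LEVEL: ${level}`);
  }
  return {
    sdkKey,
    logLevel: level,
    // Events stay enabled unless explicitly disabled, e.g. in load tests.
    sendEvents: source["LD_SEND_EVENTS"] !== "false",
    proxyUrl: source["LD_PROXY_URL"],
  };
}

// Redacted one-line summary for a startup log; only the last four key characters are shown.
function describeSettings(s: LdSettings): string {
  const keyHint = s.sdkKey.length > 4 ? `***${s.sdkKey.slice(-4)}` : "***";
  return `ld key=${keyHint} log=${s.logLevel} events=${s.sendEvents} proxy=${s.proxyUrl ?? "none"}`;
}
```

In a Node.js service, `loadLdSettings(process.env)` would be one way to feed it; the same function works with values pulled from a vault client.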

Bootstrapping strategy defined and implemented

Applies to: JS Client-Side SDK in browsers, React SDK, Vue SDK

Prevent UI flicker by rendering with known values before the SDK connects and retrieves flags.

Implementation

- SHOULD Enable bootstrap: 'localStorage' for SPAs/PWAs to reuse cached values between sessions.

- SHOULD For SSR or static HTML, embed a server-generated flags JSON and pass to the client SDK at init.

- MUST Document which strategy each app uses and when caches expire.

- SHOULD Reconcile bootstrapped values with live updates and re-render when differences appear.

Validation

- Pass if under offline/slow network the first paint uses bootstrapped values with no visible flash of wrong content.

- Pass if clearing storage falls back to safe defaults and live updates correct the UI on reconnect.

- Pass if evaluations are recorded after successful initialization.

Client-side SDKs

The following items apply to all client-side and mobile SDKs.

Application does not block on identify

Calls to identify return a promise that resolves when flags for the new context have been retrieved. In many applications, using the existing flags is acceptable and preferable to blocking in a situation where flags cannot be retrieved.

Implementation

- MAY Continue without waiting for the promise to resolve

- SHOULD Implement a timeout when identify is called

Validation

- Pass if the application functions after calling identify while the SDK domains (clientsdk.launchdarkly.com or app.launchdarkly.com) are blocked.

Application does not rapidly call identify

In mobile and client-side SDKs, identify results in a network call to the evaluation endpoint. Make calls to identify sparingly. For example:

Good times to call identify:

- During a state transition from unauthenticated to authenticated

- When an attribute of a context changes

- When switching users

Bad times to call identify:

- To implement a currentTime attribute in your context that updates every second

- To implement contexts that appear multiple times in a page, such as per-product contexts
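
One way to keep identify calls sparse is to skip the call when the context has not changed since the last one. The sketch below wraps an identify function passed in by the caller; `SimpleContext` and `createIdentifyGate` are hypothetical names, and the flat attribute shape is a simplification of real contexts.

```typescript
// Simplified, flat context shape for illustration.
interface SimpleContext {
  kind: string;
  key: string;
  [attribute: string]: string | number | boolean;
}

// Wraps an identify function so that an unchanged context does not trigger another network call.
function createIdentifyGate(identify: (ctx: SimpleContext) => Promise<void>) {
  let lastFingerprint: string | undefined;
  return (ctx: SimpleContext): Promise<void> => {
    // Sort attribute names so that property order does not affect the comparison.
    const fingerprint = JSON.stringify(
      Object.keys(ctx).sort().map((attr) => [attr, ctx[attr]]),
    );
    if (fingerprint === lastFingerprint) return Promise.resolve();
    lastFingerprint = fingerprint;
    return identify(ctx).catch((err: unknown) => {
      // Forget the fingerprint on failure so the next call retries.
      if (lastFingerprint === fingerprint) lastFingerprint = undefined;
      throw err;
    });
  };
}
```

This only removes redundant calls. Rapidly changing attributes such as timestamps still need to be kept out of the context.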

Browser SDKs

The following items apply only to the following SDKs:

- JavaScript Client SDK

- React SDK

- Vue SDK

Send events only for variation

Avoid sending spurious events when allFlags is called. Sending evaluation events for allFlags prevents flags from ever being reported as stale and may skew guarded rollouts and experiments by recording false impressions.

Implementation

- MUST Set sendEventsOnlyForVariation: true in the SDK options

Validation

- Pass if calls to allFlags do not generate evaluation/summary events

Bootstrapping strategy defined and implemented

Prevent UI flicker by rendering with known values before the SDK connects and retrieves flags. To learn more about bootstrapping, read Bootstrapping.

Implementation

- SHOULD Enable bootstrap: 'localStorage' or bootstrap from a server-side SDK

Validation

- Pass if under offline/slow network the first paint uses bootstrapped values with no visible flash of wrong content.

Mobile SDKs

The following items apply to mobile SDKs.

Configure application identifier

The Mobile SDKs automatically capture device and application metadata. To learn more about automatic environment attributes, read Automatic Environment Attributes.

We recommend that you set the application identifier to a different value for each separately distributed software binary.

For example, suppose you have two mobile apps, one for iOS and one for Android. If you set the application identifier to “example-app” and the version to “1.0” in both SDKs, then when you create a flag targeting rule based only on application information, the flag will target both the iOS and Android application. This may not be what you intend.

We recommend using different application identifiers in this situation, for instance, by setting “example-app-ios” and “example-app-android” in your application metadata configuration.

Implementation

- MUST Configure the application identifier in the SDK configuration to a unique value for each platform

Validation

- Pass if separate applications appear in the LaunchDarkly dashboard for each platform, for example android and ios.

Serverless functions

The following applies to SDKs running in serverless environments such as AWS Lambda, Azure Functions, and Google Cloud Functions.

Initialize the SDK outside of the handler

Many serverless environments re-use execution environments for many invocations of the same function. This means that you must initialize the SDK outside of the handler to avoid duplicate connections and resource usage.

Implementation

- MUST Initialize the SDK outside of the function handler

- MUST NOT Close the SDK in the handler
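
The pattern can be sketched as follows. `ServerFlagClient`, `initClient`, and `boolVariation` are hypothetical stand-ins so the example is self-contained; in a real function, `initClient` would create and initialize the actual SDK client.

```typescript
// Hypothetical client shape; a real function would use the SDK's own client type.
interface ServerFlagClient {
  boolVariation(flagKey: string, fallback: boolean): boolean;
}

let initCount = 0;

// Stand-in for SDK creation. This stub always returns the fallback value.
function initClient(): Promise<ServerFlagClient> {
  initCount += 1;
  const stub: ServerFlagClient = {
    boolVariation: (_flagKey, fallback) => fallback,
  };
  return Promise.resolve(stub);
}

// Module scope: runs once per execution environment, not once per invocation.
const clientReady = initClient();

// Handler: awaits the shared client and never closes it.
async function handler(event: { userId: string }): Promise<string> {
  const client = await clientReady;
  const variant = client.boolVariation("enable-new-checkout", false) ? "new" : "legacy";
  return `${event.userId}:${variant}`;
}
```

Because `clientReady` is created at module load, warm invocations skip initialization entirely and only the first invocation in an execution environment pays for it.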

Leverage LD Relay to reduce initialization latency

Serverless functions spawn many instances in order to handle concurrent requests. LD Relay can be deployed in order to reduce outgoing network connections, reduce outbound traffic and reduce initialization latency.

Implementation

- SHOULD Deploy LD Relay in the same region as the serverless function

- SHOULD Configure LD Relay as an event forwarder and configure the SDK's event URI to point to LD Relay

- SHOULD Configure the SDK in proxy mode or daemon mode instead of connecting directly to LaunchDarkly

- MAY Call flush at the end of invocation to ensure all events are sent

- MAY Call flush/close when the runtime is being permanently terminated in environments that support this signal. Lambda does not provide this signal to functions themselves, only to extensions.

Consider daemon mode if you have a particularly large initialization payload and only need a couple of flags for the function.

Using Flags

Define context kinds and attributes

Choose context kinds/attributes that enable safe targeting, deterministic rollouts, and cross-service alignment.

Implementation

- MUST Define context kinds, for example user, organization, or device. Use multi-contexts when both person and account matter.

- MUST NOT Derive context keys from PII, secrets or other sensitive data.

- MUST Mark sensitive attributes as private. Context keys cannot be private.

- SHOULD Use keys that are unique, opaque, and high-entropy

- SHOULD Document the source/type for all attributes. Normalize formats, for example ISO country codes.

- SHOULD Provide shared mapping utilities to transform domain objects → LaunchDarkly contexts consistently across services.

- SHOULD Avoid targeting on sensitive information or secrets.

Validation

- Pass if a 50/50 rollout yields consistent allocations across services for the same context.

- Pass if sample contexts evaluated in a harness match expected targets/segments.

- Pass if a PII audit finds no PII in keys and private attributes are redacted in events.

- Pass if applications create/define contexts consistently across services
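
A shared mapping utility might look like the sketch below. The domain types `Person` and `Account` are hypothetical. The returned shape (a `kind` of "multi", one entry per context kind, and `_meta.privateAttributes`) is assumed to match LaunchDarkly's documented context format; confirm it against the SDK version you use.

```typescript
// Hypothetical domain objects.
interface Account {
  id: string;
  plan: string;
  country: string;
}

interface Person {
  id: string;
  email: string;
  role: string;
}

// Builds a multi-context from domain objects so every service maps them the same way.
function toLdContext(person: Person, account: Account) {
  return {
    kind: "multi" as const,
    user: {
      key: person.id, // opaque internal ID, never the email address
      role: person.role,
      email: person.email,
      _meta: { privateAttributes: ["email"] }, // assumed format for private attributes
    },
    organization: {
      key: account.id,
      plan: account.plan,
      country: account.country.toUpperCase(), // normalized to upper-case ISO country code
    },
  };
}
```

Publishing this function in a shared package is one way to make context construction consistent across services, which consistent rollout allocation depends on.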

Define and document fallback strategy

Every flag must specify a safe fallback value that is used when the flag is unavailable. For more information on fallback values, read Maintaining fallback values.

Implementation

- MUST Pass the fallback value as the last argument to variation()/variationDetail() with correct types.

- MUST Define a strategy for determining when to audit and update fallback values.

- MUST Implement automated tests to validate that the application functions in, at most, a degraded state when flags are unavailable.

Validation

- Pass if blocking SDK network causes the application to use the fallback path safely with no critical errors.
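
An automated check for the fallback path can use a test double that behaves like a disconnected SDK and always returns the fallback. This is a minimal sketch: `FlagReader`, `unavailableFlags`, and `selectCheckout` are hypothetical names, and the narrow interface is an assumption about how your application wraps the SDK client.

```typescript
// Minimal evaluation interface used by application code (a stand-in for the SDK client).
interface FlagReader {
  variation<T>(flagKey: string, fallback: T): T;
}

// Test double that mimics an uninitialized or disconnected SDK: it always returns the fallback.
const unavailableFlags: FlagReader = {
  variation: <T>(_flagKey: string, fallback: T): T => fallback,
};

// Application code under test: the fallback (false) must select the safe, legacy path.
function selectCheckout(flags: FlagReader): "new" | "legacy" {
  return flags.variation("enable-new-checkout", false) ? "new" : "legacy";
}
```

Running the same application-level tests against `unavailableFlags` shows which code paths are actually exercised during an outage.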

Use variation/variationDetail, not allFlags/allFlagsState for evaluation

Direct evaluation emits accurate usage events required for flag statuses, experiments, and rollout tracking.

Implementation

- MUST Call variation()/variationDetail() at the decision point

- MUST NOT Implement an additional layer of caching for calls to variation that would prevent accurate flag evaluation telemetry from being generated

Validation

- Pass if accurate flag evaluation data is shown in the Flag Monitoring dashboard

Flags are evaluated only where a change of behavior is exposed

Generate evaluation events only when a change in behavior is exposed to the end user. This ensures that features such as experimentation and guarded rollouts function correctly.

Implementation

- MUST Evaluate flags only when the value is used

- SHOULD Evaluate flags as close to the decision point as possible

The behavior changes are encapsulated and well-scoped

Isolate new vs. old logic to ease future cleanup. A rule of thumb is to never store the result of a boolean flag in a variable. Evaluating the flag directly in the condition keeps the behavior impacted by the flag fully contained within the branches of the if statement.

Implementation

- SHOULD Place new/old logic in separate functions/components; avoid mixed branches.

- SHOULD Evaluate the flag inside the decision point (if) to simplify later removal.

// Example: evaluation scoped to the component
export function CheckoutPage() {
  if (ldClient.variation('enable-new-checkout', false)) {
    return <NewCheckoutComponent />;
  }
  return <LegacyCheckoutComponent />;
}

Subscribe to flag changes

In applications with a UI or server-side use-cases where you need to respond to a flag change, use the update/change events to update the state of the application.

Implementation

- SHOULD Use the subscription mechanism provided by the SDK to respond to updates. To learn more about subscribing to flag changes, read Subscribing to flag changes.

- SHOULD Unregister temporary handlers to avoid memory leaks.

Validation

- Pass if the application responds to flag changes
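
A subscription helper that also handles cleanup could be sketched as follows. The `FlagChangeSource` interface is an assumption modeled on on/off style event subscriptions with a `change:<flag-key>` event name, as used by the JavaScript client-side SDK; other SDKs expose different listener mechanisms, and `watchFlag` is a hypothetical helper.

```typescript
type FlagChangeHandler = (current: unknown, previous: unknown) => void;

// Minimal emitter interface (an assumption, not a complete SDK type).
interface FlagChangeSource {
  on(eventName: string, handler: FlagChangeHandler): void;
  off(eventName: string, handler: FlagChangeHandler): void;
}

// Subscribes to changes for one flag and returns a function that removes the handler again.
function watchFlag(
  source: FlagChangeSource,
  flagKey: string,
  onChange: (value: unknown) => void,
): () => void {
  const eventName = `change:${flagKey}`;
  const handler: FlagChangeHandler = (current) => onChange(current);
  source.on(eventName, handler);
  // Keep the same handler reference so that off() can find and remove it.
  return () => source.off(eventName, handler);
}
```

Returning the unsubscribe function makes it straightforward to call it from a component teardown hook, which addresses the memory-leak concern above.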

This topic covers strategies for making your application resilient when feature flags are unavailable due to network partitions or outages.

Application Resilience Goals

Design your application to be resilient even if LaunchDarkly or the network is degraded. Your application must:

- Start successfully even if the SDK cannot connect during initialization

- Function in at most a degraded state when LaunchDarkly's service is unavailable

A degraded state is acceptable when new features are temporarily disabled or optimizations are bypassed. A degraded state is not acceptable when core functionality breaks, errors occur, or user data is corrupted.

Focus Areas

Resiliency can be achieved through SDK implementation and via external infrastructure components such as LD Relay. This section covers both strategies and their tradeoffs. Our general recommendation is to start with SDK implementation and only add LD Relay if you have a specific use case that requires it.

Future Improvements

LaunchDarkly's ongoing work in Feature Delivery v2 (FDv2) focuses on strengthening SDK and service capabilities so customers can achieve true resilience without depending on additional infrastructure components.

Key Concepts

This section defines the fundamental concepts and metrics used when designing resilient LaunchDarkly integrations.

Initialization

Initialization is the process by which a LaunchDarkly SDK establishes a connection to LaunchDarkly's service and retrieves the current flag rules for your environment. During initialization, the SDK performs these steps:

- Establishes a connection to LaunchDarkly's streaming or polling endpoints

- Retrieves all flag definitions and rules for your environment

- Stores these rules in an in-memory cache

- Begins listening for real-time updates to flag rules

Until initialization completes, the SDK cannot evaluate flags using the latest rules from LaunchDarkly. If a flag evaluation is requested before initialization completes, the SDK returns the fallback value you provide.

Initialization is a one-time process that occurs when the SDK client is first created. After initialization, the SDK maintains a persistent connection in streaming mode or periodically polls in polling mode to receive updates to flag rules.

Fallback/Default Values

Fallback values, also called default values, are the values your application provides to the SDK when calling variation() or variationDetail(). These values are returned by the SDK when:

- The SDK has not yet initialized

- The SDK cannot connect to LaunchDarkly's service

- A flag does not exist or has been deleted

- The SDK is in offline mode

Fallback values are defined in your application code and represent the safe, default behavior your application should exhibit when flag data is unavailable. These values ensure your application continues to function even when LaunchDarkly's service is unreachable.

Critical principle: Every flag evaluation must provide a fallback value that represents a safe, degraded state for your application. Never assume flag data is always available.

Key Metrics

Understanding these metrics helps you measure and improve the resilience of your LaunchDarkly integration.

Initialization Availability

Initialization Availability measures the period during which an SDK can successfully retrieve flags that are, at worst, stale.

High initialization availability means your application will rarely see fallback values served.

Low initialization availability means your application frequently starts without flag data and must rely entirely on fallback values, potentially leading to degraded functionality.

Initialization Latency

Initialization Latency measures the time between creating an SDK client instance and when it successfully retrieves flag rules and is ready to evaluate flags.

This metric is critical for:

- Application startup time - Long initialization latency can delay application readiness

- User experience - Client-side applications may show incorrect UI if initialization takes too long

- Serverless cold starts - High latency can impact function execution time

Best practices aim to minimize initialization latency through:

- Non-blocking initialization with appropriate timeouts

- Bootstrapping strategies for client-side SDKs

- Relay Proxy deployment for serverless and high-scale environments

Evaluation Latency

Evaluation Latency measures the time it takes for the SDK to evaluate a flag and return a value after variation() is called.

This metric is typically very low, under 1ms, because:

- Flag rules are cached in memory after initialization

- Evaluation is a local computation that does not require network calls

- The SDK uses efficient in-memory data structures

Evaluation latency can increase if:

- The SDK is evaluating many flags simultaneously under high load

- Flag rules are extremely complex with many targeting rules or large segments

- The SDK is using external stores like Redis that introduce network latency

Update Propagation Latency

Update Propagation Latency measures the time between when a flag change is made in the LaunchDarkly UI and when that change is reflected in SDK evaluations.

This metric is important for:

- Real-time feature rollouts - Understanding how quickly changes reach your applications

- Emergency rollbacks - Knowing how quickly you can disable a feature across all instances

- Consistency requirements - Ensuring multiple services see flag changes at roughly the same time

Update propagation latency depends on:

- SDK mode - Streaming mode provides near-instant updates typically under 200ms, while polling mode adds delay based on polling interval

- Network conditions - Latency between your infrastructure and LaunchDarkly's service

- Relay Proxy configuration - Additional hop when using Relay Proxy, usually minimal

- Geographic distribution - Applications in different regions may receive updates at slightly different times

In streaming mode, updates typically propagate in under 200ms. In polling mode, updates propagate within the configured polling interval, typically 30-60 seconds.

SDK Implementation

This guide explains how to implement LaunchDarkly SDKs for maximum resilience and minimal latency. Following these practices ensures your application can start reliably and function in a degraded state when LaunchDarkly's service is unavailable.

Key Points

- The SDK automatically retries connectivity in the background - Even if the initialization timeout was exceeded, the SDK continues attempting to establish connectivity and update flag rules automatically.

- You do not need to manually manage connections or implement your own cache on top of the SDK - The SDK handles all connection management and caching automatically.

- Fallback values are only served when the SDK hasn't initialized or the flag doesn't exist - Once initialization completes, the SDK uses cached flag rules for all evaluations. Fallback values are returned only during the initial connection period or when a flag key is invalid.

- You can subscribe to flag updates to update application state - Most SDKs provide a mechanism to fire a callback when flag rules change. Use this to have your application react to updates when connectivity is restored instead of waiting for the next variation call. This is common in Single-Page Applications and Mobile Applications.

Preflight Checklist Items for Application Latency

The following items from the SDK Preflight Checklist are particularly important for improving application latency and resilience:

- Application does not block on initialization - Set timeouts: 100-500ms client-side, 1-5s server-side. Prevents extended startup delays.

- Bootstrapping strategy defined and implemented - For client-side SDKs. Provides flag values immediately on page load, eliminating initialization delay for first paint.

- SDK is initialized once as a singleton early in the application's lifecycle - Prevents duplicate connections and ensures efficient resource usage.

- Define and document fallback strategy - Every flag evaluation must provide a safe fallback value. Enables immediate evaluation without waiting for initialization.

- Use variation/variationDetail, not allFlags/allFlagsState for evaluation - Direct evaluation is faster and provides better telemetry.

- Leverage LD Relay to reduce initialization latency - For serverless functions and high-scale applications. Reduces initialization time from hundreds to tens of milliseconds.

- Initialize the SDK outside of the handler - For serverless functions. Allows container reuse, eliminating initialization latency for warm invocations.

Currently in Early Access, Data Saving Mode introduces several key changes to the LaunchDarkly data system that greatly improve resilience to outages. To learn more, read Data Saving Mode in the LaunchDarkly documentation.

Initialization Caching

In Data Saving Mode, the SDK polls for initialization and then opens a streaming connection to receive real-time flag configuration changes. You can achieve high initialization availability by adding a caching proxy between the SDK and the polling endpoint while leaving the direct connection to the streaming endpoint in place.

The standard data system allows falling back to polling when streaming is not available.

Maintaining fallback values

Fallback values are critical to application resilience. They ensure your application continues functioning when LaunchDarkly's service is unavailable or flag data cannot be retrieved. However, fallback values can become stale over time, leading to incorrect behavior during outages. This guide explains how to choose appropriate fallback values and maintain them effectively.

Choosing Fallback Values

The fallback value you choose depends on the risk and impact of the feature being unavailable. Consider these strategies:

Failing Closed

Definition: Turn off the feature when flag data is unavailable.

When to use:

- New features that haven't been fully validated

- Features that have not been released to all users

- Features that could introduce significant load or stability issues if released to everyone at once

Example:

// New checkout flow - fail closed if flag unavailable
const useNewCheckout = ldClient.variation('new-checkout-flow', false);
if (useNewCheckout) {
  return <NewCheckoutComponent />;
}
return <LegacyCheckoutComponent />;

Failing Open

Definition: Enable the feature for everyone when flag data is unavailable.

When to use:

- Features that have been generally available, also known as GA, for a while and are stable

- Circuit breakers/operational flags where the on state is the norm

- Features that would have significant impact if disabled for everyone at once

Example:

// Enable caching when flag is unavailable. Failing closed would cause a significant performance degradation.

const enableCache = ldClient.variation('enable-caching', true);

For temporary flags that we intend to remove, consider cleaning up and archiving the flag instead of updating the fallback value to true.

Dynamic Fallback Values

Definition: Implement logic to provide different fallback values to different users based on context.

When to use:

- Configuration/operational flags that override values from another source (environment variables, configuration, etc.)

- Advanced scenarios requiring sophisticated fallback logic

Example:

function getRateLimit(request: RequestContext): number {

// dynamic rate limit based on the request method

return ldClient.variation('config-rate-limit', request, request.method === 'GET' ? 100 : 10);

}

Methods for Maintaining Fallback Values

Fallback values can become stale as flags evolve. Use these methods to ensure fallback values remain accurate and up-to-date:

Create a Formal Process

Establish a formal process for defining and updating fallback values:

Process steps:

- Define fallback values at flag creation - Require fallback values when creating flags

- Document fallback strategy - Document why each fallback value was chosen (failing closed vs. failing open)

- Review fallback values during flag lifecycle - Review fallback values when:

- Flags are promoted from development to production

- Flags are modified or targeting rules change

- Flags are deprecated or removed

- Update fallback values as flags mature - Update fallback values when flags become GA or stable

- Test fallback values - Include fallback value testing in your testing strategy

Documentation template:

Flag: new-checkout-flow

Fallback value: false - failing closed

Rationale: New feature not yet validated in production. Safer to use legacy checkout during outages.

Review date: 2024-01-15

Next review: When flag reaches 50% rollout

Automated Reports on Fallback Values

Use automated reporting to identify stale or incorrect fallback values:

Runtime Monitoring (Data Export or SDK Hooks)

Monitor fallback value usage in real-time:

Data Export:

- Export evaluation events to your data warehouse

- Feature events expose the fallback value as the default property on the event

- Compare fallback values to current flag state and desired behavior

SDK Hooks:

- Implement a before evaluation hook

- Record the fallback value for each evaluation in a telemetry system

- Compare fallback values to current flag state and desired behavior

Example SDK Hook:

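class FallbackMonitoringHook implements Hook {

// The Hook interface requires metadata that identifies the hook

getMetadata() {

return { name: 'FallbackMonitoringHook' };

}

// Placeholder: replace with a call to your telemetry system

private logFallbackValue(flagKey: string, fallbackValue: unknown) {

console.log(`fallback for ${flagKey}:`, fallbackValue);

}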

beforeEvaluation(seriesContext: EvaluationSeriesContext, data: EvaluationSeriesData) {

// Always log the fallback value being used

this.logFallbackValue(

seriesContext.flagKey,

seriesContext.defaultValue,

);

return data;

}

}
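One way to use the recorded values is to look for flags that are evaluated with more than one fallback value, which usually means applications disagree about the safe default. The sketch below assumes an in-memory recorder; FallbackRecorder is a hypothetical name, and a real system would send these records to your telemetry pipeline instead.

```typescript
// Hypothetical in-memory recorder for fallback values seen at evaluation time.
class FallbackRecorder {
  // flag key -> JSON-encoded fallback value -> number of evaluations seen
  private counts: Record<string, Record<string, number>> = {};

  record(flagKey: string, fallbackValue: unknown): void {
    const encoded = fallbackValue === undefined ? 'undefined' : JSON.stringify(fallbackValue);
    const perFlag = this.counts[flagKey] ?? (this.counts[flagKey] = {});
    perFlag[encoded] = (perFlag[encoded] ?? 0) + 1;
  }

  // Flags that were evaluated with more than one distinct fallback value.
  inconsistentFlags(): string[] {
    return Object.keys(this.counts)
      .filter((flagKey) => Object.keys(this.counts[flagKey]).length > 1)
      .sort();
  }
}
```

A hook's logging method could call record() with the flag key and the default value it receives.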

API-Based Reporting

Use the LaunchDarkly API to generate reports on fallback values:

Example: This fallback-report script demonstrates how to:

- Retrieve flag definitions from the LaunchDarkly API

- Compare flag fallthrough/off variations with fallback values in code

- Generate reports identifying mismatches

Use cases:

- Scheduled reports comparing flag definitions with code fallback values

- CI/CD integration to detect fallback value mismatches

- Periodic audits of fallback value accuracy

Note: This approach relies on telemetry from SDKs generated when variation/variationDetail are called. The API only reports one fallback value and cannot reliably handle situations where different fallback values are used for different users or applications.
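The comparison step of such a report can be sketched as follows. FlagState is a simplified, hypothetical shape rather than the LaunchDarkly API response, and codeFallbacks is assumed to be a map of flag key to the fallback value found in code.

```typescript
// Simplified, hypothetical view of a flag; field names do not mirror the API response.
interface FlagState {
  key: string;
  servedValue: unknown; // value currently served by the fallthrough or off variation
}

interface Mismatch {
  key: string;
  fallback: unknown;
  served: unknown;
}

function findFallbackMismatches(
  flags: FlagState[],
  codeFallbacks: Record<string, unknown>,
): { mismatches: Mismatch[]; missing: string[] } {
  const mismatches: Mismatch[] = [];
  const missing: string[] = [];
  for (const flag of flags) {
    if (!(flag.key in codeFallbacks)) {
      missing.push(flag.key); // no fallback recorded for this flag
      continue;
    }
    const fallback = codeFallbacks[flag.key];
    // Compare JSON encodings so object-valued variations compare by content.
    // Note that key order affects the encoding.
    if (JSON.stringify(fallback) !== JSON.stringify(flag.servedValue)) {
      mismatches.push({ key: flag.key, fallback, served: flag.servedValue });
    }
  }
  return { mismatches, missing };
}
```

A CI job could fail the build when mismatches is non-empty, or only warn, depending on how strictly your team wants fallback values to track flag state.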

Static Analysis and AI Tools

Use static analysis or AI tools to analyze fallback values:

Static analysis:

- Scan codebases for variation() calls

- Extract fallback values from source code

- Compare with flag definitions
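A minimal sketch of the scanning step is shown below. It is a regular-expression heuristic, not a parser: it only sees calls whose flag key is a string literal and whose arguments contain no nested parentheses, and it treats the last argument as the fallback. scanFallbacks and the pattern names are hypothetical.

```typescript
interface FallbackUsage {
  flagKey: string;
  fallbackSource: string; // fallback expression exactly as written in the code
  isLiteral: boolean; // true for boolean, number, or simple string literals
}

// Matches variation('key', ...) and variationDetail('key', ...) calls.
const CALL_PATTERN = /\bvariation(?:Detail)?\(\s*(['"])([^'"]+)\1\s*,([^()]*)\)/g;
const LITERAL_PATTERN = /^(?:true|false|-?\d+(?:\.\d+)?|'[^']*'|"[^"]*")$/;

function scanFallbacks(source: string): FallbackUsage[] {
  const usages: FallbackUsage[] = [];
  let match: RegExpExecArray | null;
  CALL_PATTERN.lastIndex = 0; // the pattern is global, so reset its position
  while ((match = CALL_PATTERN.exec(source)) !== null) {
    // The fallback is the last argument; string fallbacks containing commas are not handled.
    const args = match[3].split(',');
    const fallbackSource = args[args.length - 1].trim();
    usages.push({
      flagKey: match[2],
      fallbackSource,
      isLiteral: LITERAL_PATTERN.test(fallbackSource),
    });
  }
  return usages;
}
```

Calls reported with isLiteral set to false use dynamic fallback values and need manual review.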

AI tools:

- Use AI to analyze code and suggest fallback value updates. You can find an example prompt in the LaunchDarkly Labs Agent Prompts repository.

- Identify patterns in fallback value usage

- Generate recommendations based on flag lifecycle stage

- Use ldcli or the LaunchDarkly MCP to enable the agent to compare fallback values to flag definitions

Centralize Fallback Management

Centralize fallback value management to ensure consistency and simplify updates:

Wrapper Functions

Create wrapper functions around variation() and variationDetail() that load fallback values from a centralized configuration:

Example:

// fallback-config.json

{

"new-checkout-flow": false,

"cache-optimization-v2": true,

"experimental-feature": false

}

// wrapper.ts

import fallbackConfig from './fallback-config.json';

export function variationWithFallback(

client: LDClient,

flagKey: string,

context: LDContext

): LDEvaluationDetail {

let fallbackValue = fallbackConfig[flagKey];

if (fallbackValue === undefined) {

// you may want to make this an error in preproduction environments to catch missing fallback values early

console.warn(`No fallback value defined for flag: ${flagKey}`);

// you can use naming convention or other logic to determine a default fallback value

// for example, release flags may default to failing closed

if (flagKey.startsWith('release-')) {

fallbackValue = false;

}

}

return client.variationDetail(flagKey, context, fallbackValue);

}

Benefits:

- Single source of truth for fallback values

- Easier to automate fallback value updates

- Logic can be shared across applications and services

Tradeoffs:

- Difficult to implement dynamic fallback values (e.g., different fallback values for different users or applications)

- Loss of locality: fallback values are no longer present in the variation call and require checking the fallback definition file

Automatic Fallback Updates During Build

Automatically update fallback values during your build process using flag fallthrough or off variations:

Process:

- During build, query LaunchDarkly API for flag definitions

- Extract fallthrough or off variations for each flag

- Update fallback configuration files with current values

- Validate that fallback values match expected types

Example build script:

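#!/bin/bash

# Update fallback values from LaunchDarkly flags

# Writes a { "flag-key": value } map in the same shape as fallback-config.json

# fallthrough.variation is an index into the flag's variations, so resolve it to the value

# Flags whose fallthrough is a percentage rollout have no single variation and are skipped

curl -s -H "Authorization: ${LD_API_KEY}" \

"https://app.launchdarkly.com/api/v2/flags/${PROJECT_KEY}" \

| jq '[.items[] | .environments.production.fallthrough.variation as $i | select($i != null) | {key: .key, value: .variations[$i].value}] | from_entries' \

> fallback-config.json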

Benefits:

- Ensures fallback values match current flag definitions

- Reduces manual maintenance overhead

- Catches drift between code and flag definitions

- Supports automated flag lifecycle management

Considerations:

- Additional logic may be required to determine which value to use. For example, if the flag is off, you may use the off variation

- Ensure your team is aware of how fallback values are generated from targeting state
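The selection logic described in these considerations can be sketched as follows. FlagDefinition is a simplified, hypothetical shape; check the API response you actually receive before relying on these field names.

```typescript
// Simplified, hypothetical flag shape for illustration only.
interface FlagDefinition {
  on: boolean;
  variations: unknown[];
  offVariationIndex?: number; // variation served when the flag is off
  fallthroughVariationIndex?: number; // undefined when the fallthrough is a percentage rollout
}

// Returns the value to use as the fallback, or undefined when no single value
// can be derived and a person should decide.
function deriveFallback(flag: FlagDefinition): unknown {
  const index = flag.on ? flag.fallthroughVariationIndex : flag.offVariationIndex;
  if (index === undefined || index < 0 || index >= flag.variations.length) {
    return undefined;
  }
  return flag.variations[index];
}
```

Returning undefined instead of guessing keeps the existing fallback in place until someone reviews the flag.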

LD Relay

The LaunchDarkly Relay Proxy (LD Relay) can be deployed in your infrastructure to provide a local endpoint for SDKs, reducing outbound connections and potentially improving initialization availability. However, LD Relay introduces operational complexity and new failure modes that must be carefully managed.

Risks and Operational Burden

Using LD Relay introduces:

- Additional infrastructure: More services to deploy, monitor, scale, and secure

- Resource constraints: Insufficiently provisioned relay instances can become bottlenecks or points of failure

- Maintenance overhead: Your team must handle responsibilities previously managed by LaunchDarkly's platform

Operationalizing LD Relay Properly

Deploy Highly Available Infrastructure

Load balancer:

- Implement a highly available internal load balancer as the entry point for all flag delivery traffic

- If the load balancer is not highly available, it becomes a single point of failure

- Support routing to LaunchDarkly's primary streaming network and LD Relay instances

Relay instances:

- Deploy multiple Relay Proxy instances across different availability zones

- Ensure each instance is properly sized and monitored

- Implement health checks and automatic failover

Persistent Storage

LD Relay can operate with or without persistent storage. Each approach has different tradeoffs:

With persistent storage such as Redis:

- Benefits:

- Enables scaling LD Relay instances during outages

- Allows restarting LD Relay instances without losing flag data

- Provides durable cache that survives Relay Proxy restarts

- Tradeoffs:

- Increases operational complexity. Additional service to manage.

- Requires configuring infinite cache TTLs to prevent lower availability and incorrect evaluations

- Prevents using AutoConfig. AutoConfig requires in-memory only operation.

- Additional monitoring and alerting requirements for cache health

Without persistent storage, in-memory only:

- Benefits:

- Simpler architecture with fewer components to manage

- Supports AutoConfig for dynamic environment configuration

- Lower operational overhead

- Tradeoffs:

- Relies on LD Relay cluster being able to service production traffic without restarting or adding instances during outages

- Lost cache on Relay Proxy restart. Requires re-initialization from LaunchDarkly's service.

- Must ensure sufficient capacity and redundancy to handle outages without scaling

Monitor and Alert

Key metrics to monitor:

- Initialization latency and errors

- CPU/Memory utilization

- Network utilization

- Persistent store availability

When to Use LD Relay for Improved Initialization Availability

LD Relay can improve initialization availability in these specific scenarios:

Frequent Deployments or Restarts

Use LD Relay when: You deploy or restart services frequently, at least once per day.

Why: Frequent restarts mean frequent SDK initializations. LD Relay reduces initialization latency and provides cached flag data even if LaunchDarkly's service is temporarily unavailable during a restart window.

Example scenarios:

- Kubernetes deployments with rolling restarts

- Serverless functions with frequent cold starts

- Containers that restart frequently for configuration updates

Critical Consistency Requirements

Use LD Relay when: Multiple services or instances must evaluate flags consistently, even during short outages of initialization availability.

Why: LD Relay provides a shared cache that multiple SDK instances can use, ensuring consistent flag evaluations across services even when LaunchDarkly's service is temporarily unavailable.

Example scenarios:

- Microservices that must all evaluate the same flag consistently

- Multi-region deployments requiring consistent feature rollouts

- Applications where inconsistent flag evaluations cause data corruption or business logic errors

High Impact of Fallback Values

Use LD Relay when: Fallback values cause significant business impact, such as loss of business, not just degraded UX.

Why: When fallback values cause significant business impact such as payment processing failures, data loss, or compliance violations, LD Relay provides cached flag data to avoid serving fallbacks.

Example scenarios:

- Payment processing systems where fallback values cause transaction failures

- Compliance-critical features where fallback values violate regulations

- Safety-critical systems where degraded functionality is unacceptable

Additional information

For detailed information on LD Relay configuration and scaling and performance guidelines, refer to the LD Relay chapter.

Overview

This topic explains the LaunchDarkly Relay Proxy, a small service that runs in your own infrastructure. It connects to LaunchDarkly and provides endpoints to service SDK requests and the ability to populate persistent stores.

Resources and documentation:

Use cases

This table lists common use cases for the Relay Proxy:

| Name | Description |

|---|---|

| Restart resiliency for server-side SDKs | LD Relay acts as an external cache to server-side SDKs to provide flag and segment rules |

| Reduce egress and outbound connections | LD Relay can service initialization or streaming requests from SDKs instead of having them connect to LaunchDarkly directly. In event forwarding mode, LD Relay can buffer and compress event payloads from multiple SDK instances |

| Air-gapped environments and snapshots | LD Relay can load flags or segments from an archive exported via the LaunchDarkly API. Available for Enterprise plans only |

| Reduce initialization latency | LD Relay acts as a local cache for initialization requests |

| Support PHP and serverless environments | LD Relay can service short-lived processes via proxy mode and populate persistent stores for daemon-mode clients |

| Syncing big segments to a persistent store | LD Relay can populate big segment stores with membership information for use with server-side SDKs |

Modes of operation

Proxy Mode: SDKs connect to LD Relay to receive flags and updates.

Daemon Mode: LD Relay syncs flags to a persistent store. SDKs retrieve flags as needed directly from the store and do not establish their own streaming connection.

Daemon Mode is used in environments where the SDK cannot establish a long-lived connection to LaunchDarkly. This is common in PHP applications and in serverless environments where the function is terminated after a certain amount of time.

Additional features

Event forwarding: LD Relay buffers, compresses, and forwards events from the SDKs to LaunchDarkly

Big Segment Syncing: LD Relay syncs big segment data to a persistent store

Scaling and Performance

Overview

This topic explains scaling and performance considerations for the Relay Proxy.

The computational requirements for LD Relay are fairly minimal when serving server-side SDKs or when used to populate a persistent store. In this configuration, the biggest scaling bottleneck is network bandwidth and throughput. Provision LD Relay as you would for an HTTPS proxy and tune for at least twice the number of concurrent connections you expect to see.

You should leverage monitoring and alerting to ensure that the LD Relay cluster has the capacity to handle your workload and scale it as needed.

Out of the box, LD Relay is fairly light-weight. At a minimum you can expect:

- 1 long-lived HTTPS SSE connection to LaunchDarkly's streaming endpoint per configured environment

- 1 long-lived HTTPS SSE connection to the AutoConfiguration endpoint when automatic configuration is enabled

Memory usage increases with the number of configured environments, the payload size of the flags and segments, and the number of connected SDKs. Client-side SDKs have higher computation requirements as the evaluation occurs in LD Relay.
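The baseline connection count and the headroom guidance above can be turned into a rough estimate. This sketch is arithmetic only: RelayLoad and estimateConnections are hypothetical names, and the figure of one incoming connection per server-side SDK instance comes from the Proxy Mode guidance in this chapter.

```typescript
interface RelayLoad {
  environments: number; // environments configured on this Relay instance
  autoConfigEnabled: boolean;
  serverSdkInstances: number; // server-side SDK instances streaming from this Relay
}

function estimateConnections(load: RelayLoad) {
  // One streaming connection to LaunchDarkly per environment, plus one for AutoConfig.
  const upstream = load.environments + (load.autoConfigEnabled ? 1 : 0);
  // One incoming streaming connection per connected server-side SDK instance.
  const downstream = load.serverSdkInstances;
  const expected = upstream + downstream;
  // Tune for at least twice the expected number of concurrent connections.
  return { upstream, downstream, expected, provisionFor: expected * 2 };
}
```

For example, 5 environments with AutoConfig enabled and 200 SDK instances give 206 expected connections, so you would tune for at least 412.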

Event forwarding

LD Relay handles the following event forwarding patterns:

- Approximately 1 incoming HTTPS request every 2 seconds per connected SDK. This may vary based on flush interval and event capacity settings in the SDK.

- Approximately 1 outgoing HTTPS request every 2 seconds per configured environment. This may vary based on LD Relay's configured flush interval and event capacity.

Memory usage increases with event capacity and the number of connected SDKs.
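As a rough sizing aid, the request rates above can be computed from the number of SDKs and environments. The function name is hypothetical, and the 2 second defaults reflect the approximate figures stated in this section rather than guaranteed SDK or Relay defaults.

```typescript
function estimateEventRequestsPerSecond(
  connectedSdks: number,
  environments: number,
  sdkFlushSeconds: number = 2, // approximate interval between SDK event flushes
  relayFlushSeconds: number = 2, // approximate interval between Relay flushes per environment
) {
  return {
    incomingPerSecond: connectedSdks / sdkFlushSeconds,
    outgoingPerSecond: environments / relayFlushSeconds,
  };
}
```

With 1,000 connected SDKs and 4 environments, this gives about 500 incoming and 2 outgoing requests per second.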

Scaling strategies

Each LD Relay instance maintains connections and manages configuration for the environments you assign to it. The number of environments a single instance can handle depends on your memory, CPU, and network resources. Monitor resource usage to determine when to scale.

When your environment count or size exceeds the limits of a single Relay instance, use one of these scaling approaches:

- Horizontal scaling: Add more Relay instances to share the load across your environments. This approach provides greater resilience and easier dynamic scaling.

- Vertical scaling: Increase the memory and CPU resources allocated to each Relay instance.

- Environment sharding: Distribute environments across multiple Relay instances so each Relay manages a subset of environments rather than all of them.

Environment sharding

Sharding distributes environment configurations across multiple Relay instances. Each Relay manages a subset of environments rather than all of them.

Use sharding in the following situations:

- Your environment count or size exceeds the limits of a single Relay instance.

- You need to separate instances by failure or compliance domains.

- You need to simplify health checks for load balancers and container orchestrators.

Sharding provides the following advantages:

- Reduces memory and CPU load per Relay instance.

- Limits the impact of failures or configuration errors.

- Improves cache efficiency and stability by isolating workloads.
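One way to decide which environments go on which Relay instance is to balance them by payload size. The sketch below uses a greedy assignment and assumes you have a size estimate per environment; EnvironmentInfo and shardEnvironments are hypothetical names. It does not account for failure or compliance domains, which you would apply first by partitioning the input.

```typescript
interface EnvironmentInfo {
  key: string;
  payloadBytes: number; // hypothetical size estimate for flags and segments
}

function shardEnvironments(envs: EnvironmentInfo[], shardCount: number): string[][] {
  if (!Number.isInteger(shardCount) || shardCount < 1) {
    throw new Error('shardCount must be a positive integer');
  }
  const shards: string[][] = [];
  const loads: number[] = [];
  for (let i = 0; i < shardCount; i++) {
    shards.push([]);
    loads.push(0);
  }
  // Place the largest environments first, each on the currently lightest shard.
  const sorted = envs
    .slice()
    .sort((a, b) => b.payloadBytes - a.payloadBytes || a.key.localeCompare(b.key));
  for (const env of sorted) {
    let target = 0;
    for (let i = 1; i < shardCount; i++) {
      if (loads[i] < loads[target]) target = i;
    }
    shards[target].push(env.key);
    loads[target] += env.payloadBytes;
  }
  return shards;
}
```

The assignment is deterministic for a given input, so the resulting lists can be written into each instance's static configuration.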

Separation of concerns

LD Relay can perform several functions, such as providing rules to server-side SDKs, evaluating flags for client-side SDKs, forwarding events, and populating persistent stores. You can configure LD Relay to perform one or more of these functions.

You may want to consider using separate LD Relay instances for different functions based on scaling characteristics and criticality. For example, you might have separate clusters for:

- Server-side SDKs

- Client-side SDKs (Evaluation)

- Event forwarding

- Populating persistent stores for daemon-mode or syncing big segments

This approach provides the following advantages:

- Easier to individually scale components and predict resource utilization

- Separate concerns and increase reliability of critical components (e.g., serving rules to server-side SDKs is more critical than event forwarding)

- Prevent client-side workloads from impacting server-side SDKs

This approach will generally increase the total cost of ownership of the deployment as you will need to deploy and manage multiple instances. It is more applicable to large deployments with a mix of use-cases.

Proxy Mode

Overview

This topic explains proxy mode configuration for the Relay Proxy.

This table shows recommended configuration options:

| Configuration | Recommendation | Notes |

|---|---|---|

| Automatic configuration | Enable if not using a persistent store for restart or scale resiliency | Automatic configuration allows you to avoid re-deploys when adding new projects or environments or rotating SDK keys. LD Relay cannot start if the automatic configuration endpoint is down even when a persistent store is used. If the ability to restart or add LD Relay nodes while LaunchDarkly is unavailable is critical, do not use automatic configuration |

| Event forwarding | Enable | Allow SDKs to forward events to LD Relay to reduce outbound connections and offload compression |

| Metrics | Enable | Enable at least one of the supported metrics integrations such as Prometheus |

| Persistent Stores | Optional. | Persistent stores can be used to allow the deployment of additional LD Relay instances while LaunchDarkly is unavailable. You may opt instead to provision LD Relay so it can handle at least 1.5x-2x of your production traffic in order to avoid the need to scale LD Relay during short outages. |

| Disconnected Status Time | Optional. | Time to wait before marking an environment as disconnected. The default is 1m and is sufficient for instances with reliable networks. If you are using LD Relay in an unreliable network environment, consider increasing this value |

Here is an example:

[Main]

; Time to wait before marking a client as disconnected.

; Impacts the status page

; disconnectedStatusTime=1m

;; For automatic configuration

[AutoConfig]

key=rel-abc-123

;; Event forwarding when setting the event uri to LD Relay in your SDK

[Events]

sendEvents=true

;; Metrics integration for monitoring and alerting

[Prometheus]

enabled=true

Persistent Stores

Persistent stores can be used to improve reboot and restart resilience for LD Relay in the event of a network partition between your application and LaunchDarkly.

This table shows persistent store configuration options:

| Configuration | Recommendation | Notes |

|---|---|---|

| Cache TTL | Infinite with a negative number, localTTL=-1s | An infinite cache TTL means LD Relay maintains its in-memory cache of all flags, mitigating the risk of persistent store downtime. If the persistent store goes down with a non-infinite cache TTL, you may see partial or invalid evaluations due to missing flags or segments. |

| Prefix or Table name | Use the client-side id | The prefix or table name must be unique per environment. When using autoconfig, the placeholder $CID can be used. This is replaced with the client-side id of the environment. Using the same scheme when statically configuring environments allows for consistency if you switch between these options |

| Ignore Connection Errors | ignoreConnectionErrors=true when AutoConfig is disabled | By default, LD Relay shuts down if it cannot reach LaunchDarkly after the initialization timeout is exceeded. To have LD Relay begin serving requests from the persistent store after the timeout, you must set ignore connection errors to true |

| Initialization Timeout | Tune to your needs, default is 10s | This setting controls how long LD Relay attempts to initialize its environments. Until initialization succeeds, LD Relay serves 503 errors to any SDKs that attempt to connect. What happens when the timeout is exceeded depends on the setting of ignore connection errors. When ignore connection errors is false, which is the default, LD Relay shuts down after the timeout is exceeded. Otherwise, LD Relay begins servicing SDK requests using the data in the persistent store. |

Here is an example:

[Main]

;; You must set ignoreConnectionErrors=true in order for LD Relay to start without a connection to LaunchDarkly. You should set this when using a persistent store for flag availability.

ignoreConnectionErrors=true

;; How long will LD Relay wait for connection to LaunchDarkly before serving requests.

;; If ignoreConnectionErrors is false, LD Relay will exit with an error if it cannot connect to LaunchDarkly within the timeout.

;; Default is 10 seconds

initTimeout=10s

;; NOTE: If you are using Automatic Configuration, LD Relay can not start without a connection to LaunchDarkly.

[AutoConfig]

key=rel-abc-123

; When using Automatic Configuration with a persistent store, you must set the prefix using the $CID placeholder.

envDatastorePrefix="ld-$CID"

; if using DynamoDB with a table per environment

;envDatastoreTableName="ld-$CID"

; When not using automatic configuration, set the prefix and/or table name for each environment.

; see https://github.com/launchdarkly/ld-relay/blob/v8/docs/configuration.md#file-section-environment-name

; Configure only one persistent store. Redis and DynamoDB are both shown here for reference.

[Redis]

enabled=true

url=redis://host:port

; Always use an infinite cache TTL for persistent stores

localTtl=-1s

[DynamoDB]

enabled=true

tableName=ld

; Always use an infinite cache TTL for persistent stores

localTtl=-1s
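The startup behavior described in the persistent store table can be summarized as a small decision function. This sketch restates the table only and is not derived from LD Relay's source; the type and function names are hypothetical.

```typescript
type StartupOutcome = 'serving-live-data' | 'serving-from-persistent-store' | 'not-serving';

interface StartupInputs {
  connectedBeforeTimeout: boolean; // LD Relay reached LaunchDarkly within initTimeout
  autoConfigEnabled: boolean;
  ignoreConnectionErrors: boolean;
}

function relayStartupOutcome(inputs: StartupInputs): StartupOutcome {
  if (inputs.connectedBeforeTimeout) {
    return 'serving-live-data';
  }
  // With automatic configuration, LD Relay cannot start without a connection to LaunchDarkly.
  if (inputs.autoConfigEnabled) {
    return 'not-serving';
  }
  // Without ignoreConnectionErrors, LD Relay shuts down once the timeout is exceeded.
  return inputs.ignoreConnectionErrors ? 'serving-from-persistent-store' : 'not-serving';
}
```

Only the combination of static configuration, a persistent store, and ignoreConnectionErrors=true lets a new instance start while LaunchDarkly is unreachable.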

SDK Configuration

Configure the endpoints you want to handle using LD Relay. Events must be enabled if you set the events URI. To learn more about configuring endpoints, read Proxy mode.
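As an illustration, the helper below builds endpoint options that point an SDK at LD Relay. The option names baseUri, streamUri, and eventsUri follow the Node.js server-side SDK, and other SDKs name these settings differently. The helper itself and the example hostname are hypothetical.

```typescript
// Endpoint options using the Node.js server-side SDK's option names.
interface EndpointOptions {
  baseUri: string;
  streamUri: string;
  eventsUri?: string;
}

function relayEndpointOptions(relayUrl: string, forwardEvents: boolean): EndpointOptions {
  const url = relayUrl.replace(/\/+$/, ''); // drop trailing slashes
  const options: EndpointOptions = { baseUri: url, streamUri: url };
  if (forwardEvents) {
    // Set this only when event forwarding is enabled on LD Relay.
    options.eventsUri = url;
  }
  return options;
}

// Example with a hypothetical internal hostname.
const relayOptions = relayEndpointOptions('https://relay.internal.example:8030/', true);
```

Leaving eventsUri unset keeps events flowing directly to LaunchDarkly, which is the safer choice when event forwarding is not enabled.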

Infrastructure

- Minimum 3 instances in at least two availability zones per region.

- On-prem: Consider failure domains in your deployment such as separate racks and power

- Cloud: Follow the guidelines of your hosting provider to qualify for SLAs

- Highly available load balancer with support for SSE connections

You should target 99.99 percent availability, which matches LaunchDarkly's SLA for flag delivery.
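To make the target concrete, an availability percentage can be converted into a downtime budget. The helper name is hypothetical and the calculation is plain arithmetic.

```typescript
// Minutes of downtime permitted over a period at a given availability percentage.
function allowedDowntimeMinutes(availabilityPercent: number, periodDays: number): number {
  const periodMinutes = periodDays * 24 * 60;
  return periodMinutes * (1 - availabilityPercent / 100);
}

const monthlyBudget = allowedDowntimeMinutes(99.99, 30); // about 4.3 minutes
const yearlyBudget = allowedDowntimeMinutes(99.99, 365); // about 52.6 minutes
```

A budget of roughly four minutes per month leaves little room for manual recovery, which is why redundancy and automatic failover matter for the load balancer and Relay instances.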

Scaling and performance

In addition to the base scaling and performance guidelines, Proxy Mode has the following considerations:

Server-side SDKs

1 incoming long-lived HTTPS SSE connection per connected server-side SDK instance

Client-side SDKs

- 1 outgoing long-lived HTTPS SSE connection per connected client-side SDK instance with streaming enabled

- 1 incoming HTTPS request per connected client-side SDK every time a flag or segment is updated

LD Relay does not scale well with streaming client-side connections, so avoid streaming client-side SDKs through it. It can handle polling requests to the base URI without issue. For browser SDKs, you can use LD Relay for initialization and the LaunchDarkly SaaS for streaming.

Do not point the stream URI of client-side mobile SDKs to LD Relay without careful consideration.

Daemon Mode

Overview

This topic explains daemon mode, a workaround for environments where normal operation is not possible. Avoid using it unless strictly necessary. Daemon Mode is not a solution for increasing flag availability.

- Using Daemon Mode

- Using Redis as a persistent feature store

- Using DynamoDB as a persistent feature store

Guidelines

Set useLDD=true in your SDK configuration

Daemon mode requires that you both enable daemon mode and configure the persistent store. Enabling daemon mode tells the SDK not to establish a streaming or polling connection for flags and instead rely on the persistent store. For SDK-specific configuration examples, see Using daemon mode.

Choose a unique prefix

It is critical that each environment has a unique prefix to avoid corrupting the data. When using autoconfig, you can do this automatically using the $CID placeholder. See the example below.

Restart LD Relay when persistent store is cleared

If the persistent store is missing information, either because updates were lost or the store was cleared, you should restart LD Relay to repopulate the data. You may want to consider automatically restarting LD Relay or running an LD Relay instance in one-shot mode every time the persistent store starts.

Choosing a cache TTL for your SDK

The Cache TTL controls how long the SDK will cache rules for flags and segments in memory. Once the cache expires for a particular flag or segment, the SDK fetches it from the persistent store at evaluation time.

This table shows cache TTL options and their trade-offs:

| Option | Resiliency | Evaluation Latency | Update Propagation Latency |

|---|---|---|---|

| Higher TTL | Higher | Lower | Higher |

| Lower TTL | Lower | Higher† | Lower |

| No Cache with TTL=0 | Lowest, persistent store must always be available | Highest, all evaluations require one or more network calls | Lowest, updates are seen as soon as they are written to the store |

| Infinite Cache with TTL=-1†† | Highest | Lowest with flags in memory | Updates are never seen by the SDK unless you configure stale-while-revalidate††† |

† In select SDKs such as the Java Server-Side SDK, you can configure stale-while-revalidate semantics so that flags are always served from the in-memory cache and refreshed from the persistent store asynchronously in the background

†† Only supported in some SDKs

††† Requires stale-while-revalidate support in the SDK
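The TTL options in the table can be illustrated with a small read-through cache. This is a sketch of the semantics only, not SDK code: TtlCache and StoreReader are hypothetical names, and stale-while-revalidate is not modeled.

```typescript
// Hypothetical reader; in daemon mode the SDK's persistent store integration plays this role.
type StoreReader = (key: string) => string | undefined;

interface CacheEntry {
  value: string | undefined;
  loadedAt: number;
}

class TtlCache {
  private entries: Record<string, CacheEntry | undefined> = {};
  private readStore: StoreReader;
  private ttlMs: number;
  private now: () => number;

  // ttlMs: 0 disables caching, and a negative value caches forever.
  constructor(readStore: StoreReader, ttlMs: number, now: () => number) {
    this.readStore = readStore;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): string | undefined {
    const entry = this.entries[key];
    if (entry !== undefined && this.ttlMs !== 0) {
      const fresh = this.ttlMs < 0 || this.now() - entry.loadedAt < this.ttlMs;
      if (fresh) return entry.value; // served from memory, no store access
    }
    const value = this.readStore(key); // network call to the persistent store
    if (this.ttlMs !== 0) {
      this.entries[key] = { value, loadedAt: this.now() };
    }
    return value;
  }
}
```

With a positive TTL, an update written to the store becomes visible only after the cached entry expires, which is the update propagation latency shown in the table.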

LD Relay Configuration

Here is an example:

; If using AutoConfig

[AutoConfig]

key=rel-abc-123

envDatastorePrefix="ld-$CID"

; if using DynamoDB with a table per environment

;envDatastoreTableName="ld-$CID"

[Events]

; Enable event forwarding if using LD Relay as the event uri in your SDK

sendEvents=true

[Prometheus]

enabled=true

[DynamoDB]

; change to true if using dynamodb

enabled=false

; uncomment if using the same table for all environments

;tableName=ld

localTtl=-1s

[Redis]

; Change to true if using redis

enabled=false

url=redis://host:port

localTtl=-1s

;tls=true

;password=

SDK Configuration

In Daemon Mode, you configure both daemon mode and the persistent store in the SDK. To learn more about configuring daemon mode and the persistent store, read Daemon mode.

Scaling and performance

In addition to the base scaling and performance guidelines, Daemon Mode has the following additional characteristics:

In Daemon Mode, you only need one instance of LD Relay to keep the persistent store up to date. Multiple instances do not help scale with the number of connected clients. You should take steps to ensure at least one instance is running.

The persistent store has a read-bound workload and contains the flag and segment rules for your configured environments. The amount of data is typically quite small. The data matches the information returned by the streaming and polling endpoints. Here is how to check the payload size and content:

curl -LH "Authorization: $LD_SDK_KEY" https://sdk.launchdarkly.com/sdk/latest-all | wc -c

If you are using big segments in this environment, all of the user keys for every big segment in configured environments sync to the store. This can cause an increased write workload to the persistent store.

Reverse Proxies

Overview

This topic explains reverse proxy configuration settings for the Relay Proxy.

HTTP proxies such as corporate proxies and WAFs, and reverse proxies in front of Relay such as nginx, HAProxy, and ALB are common in LD Relay deployments. This table lists settings to configure:

| Setting | Configuration | Notes |

|---|---|---|

| Response buffering for SSE endpoints | Disable | It is common for reverse proxies to buffer the entire response from the origin server before sending it to the client. Since SSEs are effectively an HTTP response that never ends, this prevents the SDK from seeing events sent over the stream until the response buffer is filled or the request closes due to a timeout. Relay sends a special header that disables response buffering in nginx automatically: X-Accel-Buffering: no |

| Forced Gzip compression for SSE endpoints | Disable if the proxy is not SSE aware | Gzip compression buffers the responses |

| Response Timeout or Max Connection Time for SSE Endpoints | Minimum 10 minutes | Avoid very long timeouts. Although the SDK client reconnects on its own, the load balancer can waste resources holding connections for clients that have already disconnected. |

| Upstream or Proxy Read Timeout | 5 minutes | The timeout between successful read requests. In nginx, this setting is called proxy_read_timeout |

| CORS Headers | Restrict to only your domains | Send CORS headers restricted to only your domains when using LD Relay with browser SDK endpoints |

| Status endpoint | Restrict access | Restrict access to this endpoint from public access |

| Metrics or Prometheus port | Restrict access | Restrict access to this port |

AutoConfig

Overview

This topic explains AutoConfig for the Relay Proxy, which allows you to configure Relay Proxy instances automatically.

Custom Roles

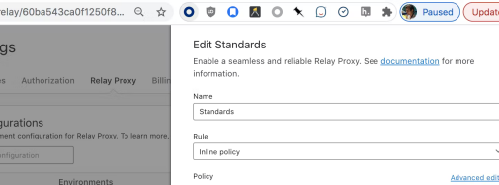

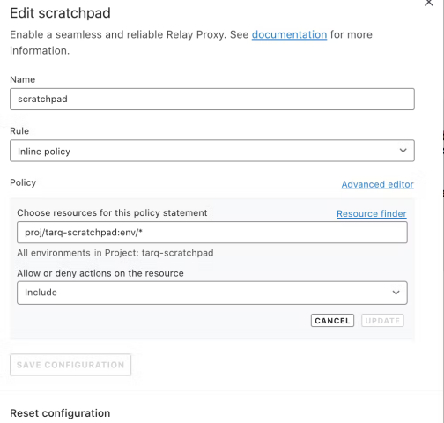

These custom role policies allow members to create and modify LD Relay AutoConfigs.

All instances

For an LD Relay Admin type role with access to all LD Relay instances:

Here is an example:

[

{

"effect": "allow",

"resources": ["relay-proxy-config/*"],

"actions": ["*"]

}

]

Specific instance by ID

Here is an example:

[

{

"effect": "allow",

"resources": ["relay-proxy-config/60be765280f9560e5cac9d4b"],

"actions": ["*"]

}

]

You can find your auto-configuration ID from the URL when editing its settings or using the API:

Using AutoConfig

Selecting Environments

You can select the environments to include in a Relay Proxy configuration.

Examples

Production environment in all projects

Here is an example:

[

{

"actions": ["*"],

"effect": "allow",

"resources": ["proj/*:env/production"]

}

]

Production environment in foo project

[

{

"actions": ["*"],

"effect": "allow",

"resources": ["proj/foo:env/production"]

}

]

All environments in projects starting with foo-

[

{

"actions": ["*"],

"effect": "allow",

"resources": ["proj/foo-*:env/*"]

}

]

Production in projects tagged "bar"

[

{

"actions": ["*"],

"effect": "allow",

"resources": ["proj/*;bar:env/production"]

}

]

All non-production environments in any projects not tagged federal

Deny has precedence within a single policy.

[

{

"actions": ["*"],

"effect": "allow",

"resources": ["proj/*:env/*"]

},

{

"actions": ["*"],

"effect": "deny",

"resources": ["proj/*:env/production", "proj/*;federal:env/*"]

}

]
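The way these statements combine can be sketched with a simplified matcher. It assumes resource strings of the form proj/key;tag:env/key with * as a wildcard, supports a single tag on the project only, and is an approximation for reasoning about policies, not LaunchDarkly's implementation. All names are hypothetical.

```typescript
interface Statement {
  effect: 'allow' | 'deny';
  resources: string[];
}

interface EnvRef {
  projectKey: string;
  projectTags: string[];
  envKey: string;
}

// Convert a key pattern with * wildcards into an anchored regular expression.
function globToRegExp(pattern: string): RegExp {
  const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, '\\$&').replace(/\*/g, '.*');
  return new RegExp('^' + escaped + '$');
}

function matchesPart(part: string, prefix: string, key: string, tags: string[]): boolean {
  if (part.indexOf(prefix) !== 0) return false;
  const [keyPattern, tag] = part.slice(prefix.length).split(';');
  return globToRegExp(keyPattern).test(key) && (tag === undefined || tags.indexOf(tag) !== -1);
}

function matchesResource(resource: string, env: EnvRef): boolean {
  const [projPart, envPart] = resource.split(':');
  if (projPart === undefined || envPart === undefined) return false;
  // Environment tags are not modeled in this sketch, so an env tag never matches.
  return (
    matchesPart(projPart, 'proj/', env.projectKey, env.projectTags) &&
    matchesPart(envPart, 'env/', env.envKey, [])
  );
}

// An environment is selected when at least one allow statement matches and no deny statement does.
function isSelected(policy: Statement[], env: EnvRef): boolean {
  let allowed = false;
  for (const statement of policy) {
    if (!statement.resources.some((r) => matchesResource(r, env))) continue;
    if (statement.effect === 'deny') return false; // deny takes precedence
    allowed = true;
  }
  return allowed;
}
```

Running the last policy above through isSelected selects a staging environment in an untagged project and rejects both production environments and any environment in a project tagged federal.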